Single-view novel view synthesis (NVS), the task of generating images from new viewpoints given a single reference image, is an important but challenging problem in computer vision. Recent NVS methods have leveraged Denoising Diffusion Probabilistic Models (DDPMs) for their ability to produce high-fidelity images. However, current diffusion-based methods typically use camera pose matrices to enforce 3D constraints globally and implicitly, which can lead to inconsistencies among images generated from different viewpoints, particularly in regions with complex textures and structures.

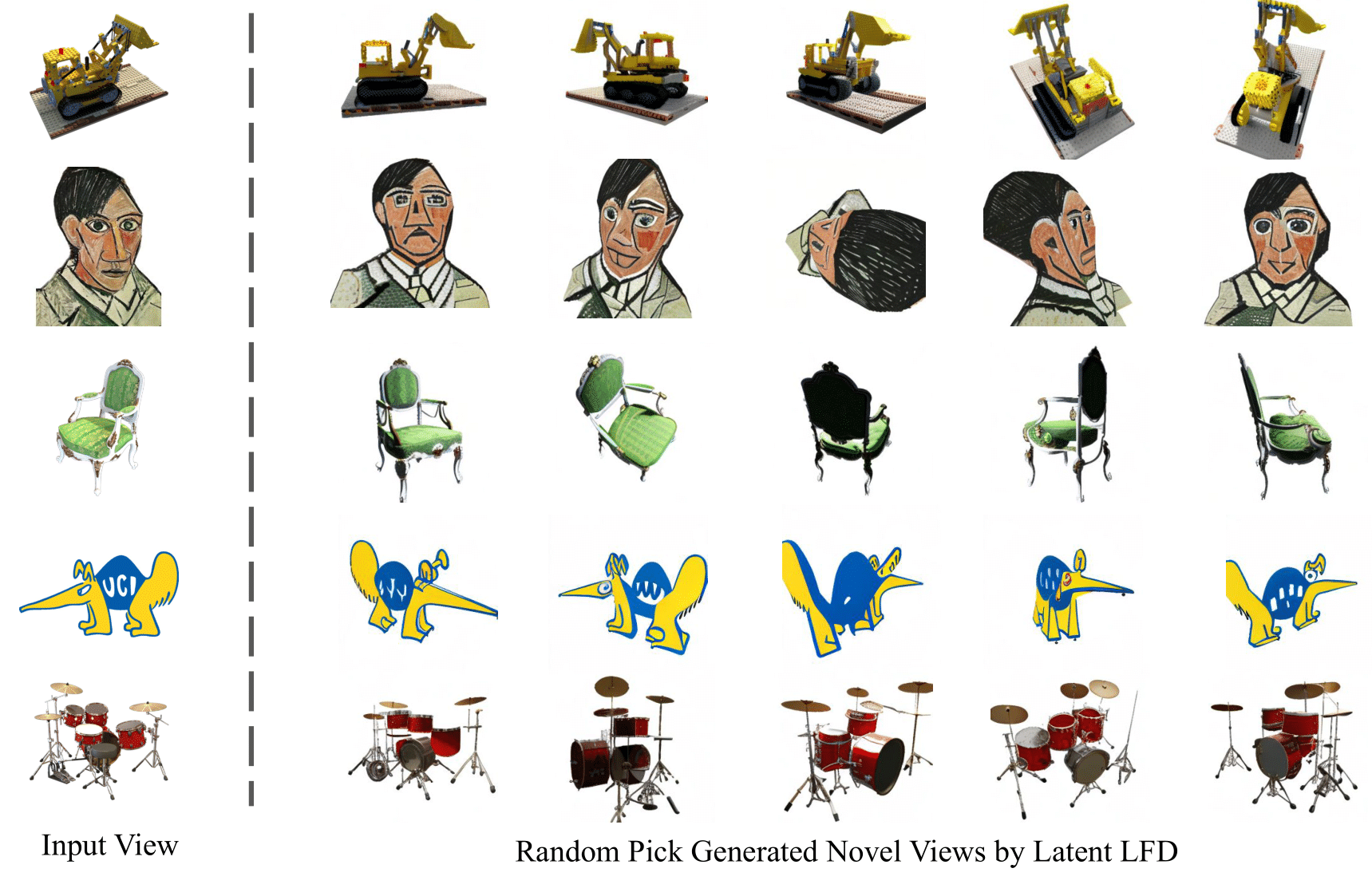

To address these limitations, we present Light Field Diffusion (LFD), a novel conditional diffusion-based approach that moves beyond the conventional reliance on camera pose matrices. Starting from the camera pose matrices, LFD transforms them into a light field encoding that has the same shape as the reference image and describes the direction of each ray. By integrating the light field encoding with the reference image, our method imposes local pixel-wise constraints within the diffusion process, which improves view consistency. We train an image-space LFD on the ShapeNet Car dataset and fine-tune a pre-trained latent diffusion model on the Objaverse dataset. The resulting latent LFD model exhibits strong zero-shot generalization to out-of-distribution datasets such as RTMV as well as to in-the-wild images. Experiments demonstrate that LFD not only produces high-fidelity images but also achieves superior 3D consistency in complex regions, outperforming existing novel view synthesis methods.
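To make the idea of a pixel-wise light field encoding concrete, the following is a minimal sketch of how a camera pose can be turned into a per-pixel ray map with the same spatial shape as the image. It is an illustration under stated assumptions, not the exact encoding used by LFD: it assumes a pinhole camera with a known intrinsic matrix `K`, a 4x4 camera-to-world pose, and a 6-channel encoding made of the ray origin and unit ray direction. The function name `light_field_encoding` and all parameter names are hypothetical.

```python
import numpy as np

def light_field_encoding(K, cam_to_world, height, width):
    """Return an (H, W, 6) map of per-pixel ray origins and unit directions.

    Assumptions (not taken from the source): pinhole intrinsics K (3x3),
    a 4x4 camera-to-world pose, and rays cast through pixel centers.
    """
    # Pixel-center coordinates; u indexes columns, v indexes rows.
    u, v = np.meshgrid(np.arange(width) + 0.5, np.arange(height) + 0.5)
    pixels = np.stack([u, v, np.ones_like(u)], axis=-1)  # (H, W, 3)

    # Back-project each pixel to a camera-space direction: K^{-1} [u, v, 1]^T.
    dirs_cam = pixels @ np.linalg.inv(K).T

    # Rotate directions into world space and normalize them.
    rotation = cam_to_world[:3, :3]
    dirs_world = dirs_cam @ rotation.T
    dirs_world = dirs_world / np.linalg.norm(dirs_world, axis=-1, keepdims=True)

    # Every ray of one camera shares the same origin (the camera center).
    origins = np.broadcast_to(cam_to_world[:3, 3], dirs_world.shape)
    return np.concatenate([origins, dirs_world], axis=-1)

# Usage: a 64x64 camera at the world origin looking down +z (illustrative values).
K = np.array([[64.0, 0.0, 32.0],
              [0.0, 64.0, 32.0],
              [0.0, 0.0, 1.0]])
enc = light_field_encoding(K, np.eye(4), height=64, width=64)
```

Because the result is aligned with the image grid, each pixel carries its own ray description, which is what allows a constraint to be applied locally rather than through a single global pose vector.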

We present a novel framework called Light Field Diffusion, an end-to-end conditional diffusion model for synthesizing novel views from a single reference image. Instead of using camera pose matrices directly, we transform them into a pixel-wise light field encoding. This imposes local pixel-wise constraints, which significantly improves model performance.

We train Light Field Diffusion in both latent space and image space. In latent space, we fine-tune a pre-trained latent diffusion model on the Objaverse dataset. In image space, we train a conditional DDPM on the ShapeNet Car dataset.
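As a rough illustration of what one training step of a conditional DDPM involves, the sketch below shows the standard forward noising of a target view and one possible way to assemble the conditioning inputs. Several details are assumptions rather than facts from this page: the linear noise schedule with 1000 steps, the 6-channel ray encodings, and the choice to pair each image with its ray encoding by channel concatenation. The names `q_sample` and `build_inputs` are hypothetical, and the denoising network itself is omitted.

```python
import numpy as np

# Assumed linear noise schedule (a common DDPM default, not confirmed by the source).
T = 1000
betas = np.linspace(1e-4, 0.02, T)
alpha_bars = np.cumprod(1.0 - betas)

def q_sample(x0, t, noise):
    """Forward diffusion: x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise."""
    return np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * noise

def build_inputs(noisy_target, reference, ref_encoding, tgt_encoding):
    """Pair each image with its ray encoding along the channel axis.

    Channel concatenation is an assumption made for illustration only.
    """
    condition = np.concatenate([reference, ref_encoding], axis=-1)
    target_in = np.concatenate([noisy_target, tgt_encoding], axis=-1)
    return condition, target_in

# Usage with random stand-in data; all arrays are (H, W, C).
rng = np.random.default_rng(0)
x0 = rng.uniform(-1.0, 1.0, size=(64, 64, 3))         # clean target view
reference = rng.uniform(-1.0, 1.0, size=(64, 64, 3))  # reference view
ref_enc = np.zeros((64, 64, 6))                       # placeholder ray encodings
tgt_enc = np.zeros((64, 64, 6))
noise = rng.standard_normal(x0.shape)
x_t = q_sample(x0, 500, noise)
condition, target_in = build_inputs(x_t, reference, ref_enc, tgt_enc)
# A denoiser would take (condition, target_in, t) and be trained to predict `noise`
# with a mean-squared-error loss.
```

In the latent-space variant, the same noising would be applied to the latent produced by the pre-trained autoencoder rather than to pixels.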

Our experiments show that the method synthesizes high-quality novel views that remain consistent with the reference image and coherent across viewpoints. Our latent Light Field Diffusion model also generalizes zero-shot to out-of-distribution datasets such as RTMV and to in-the-wild images.

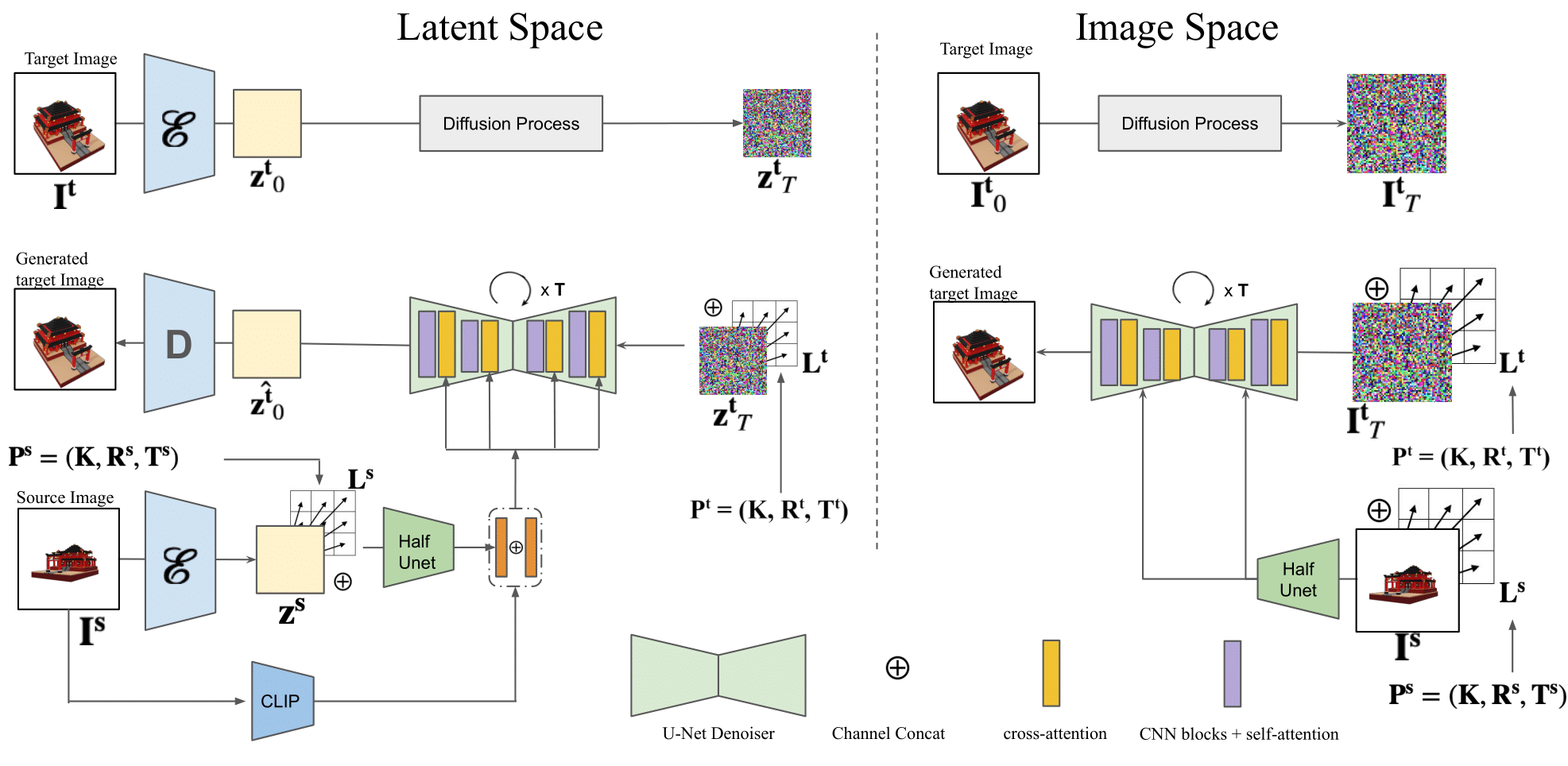

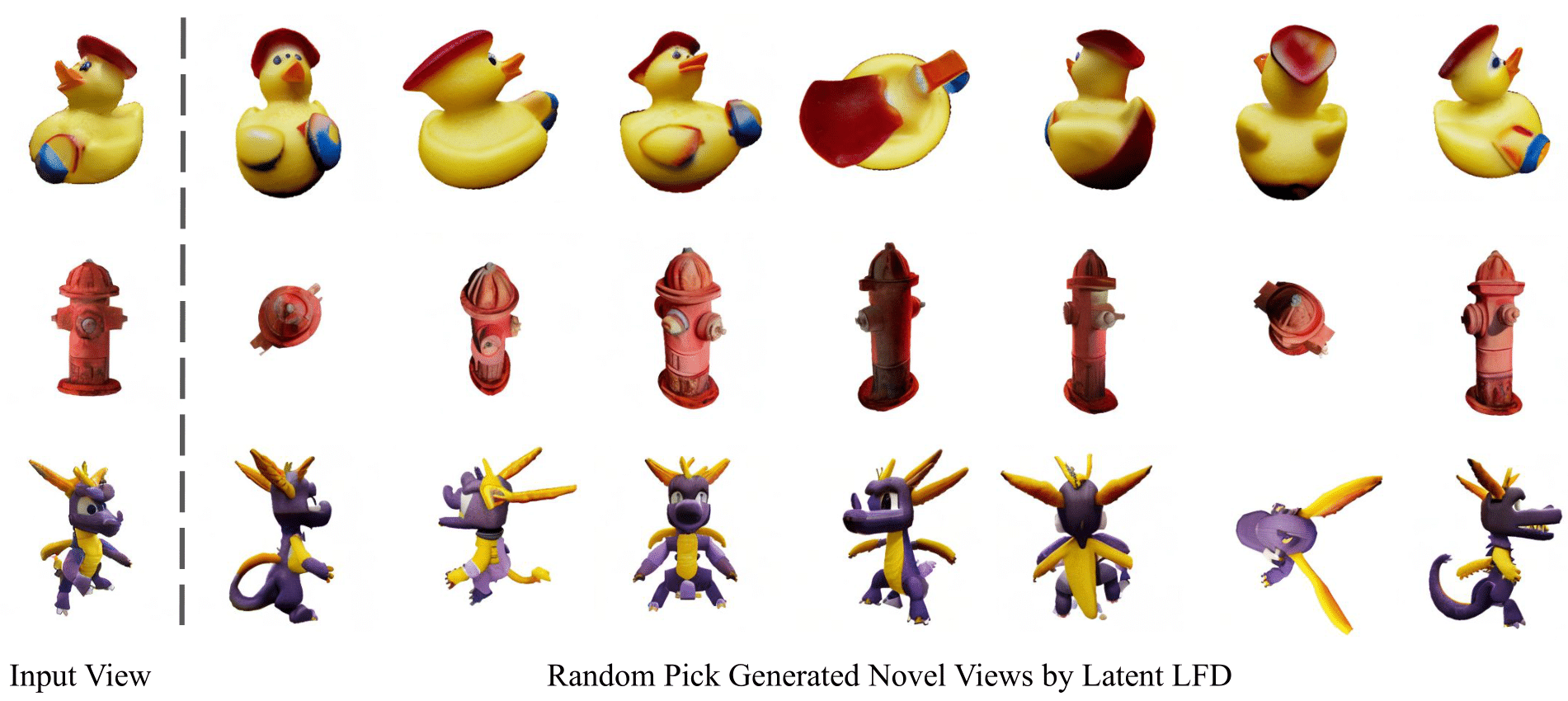

NVS on the Objaverse and GSO datasets. The first two rows show results on the Objaverse dataset. The third row shows results on the GSO dataset. We set the first image as a reference, randomly generate 10 novel views, and directly show results without cherry-picking.

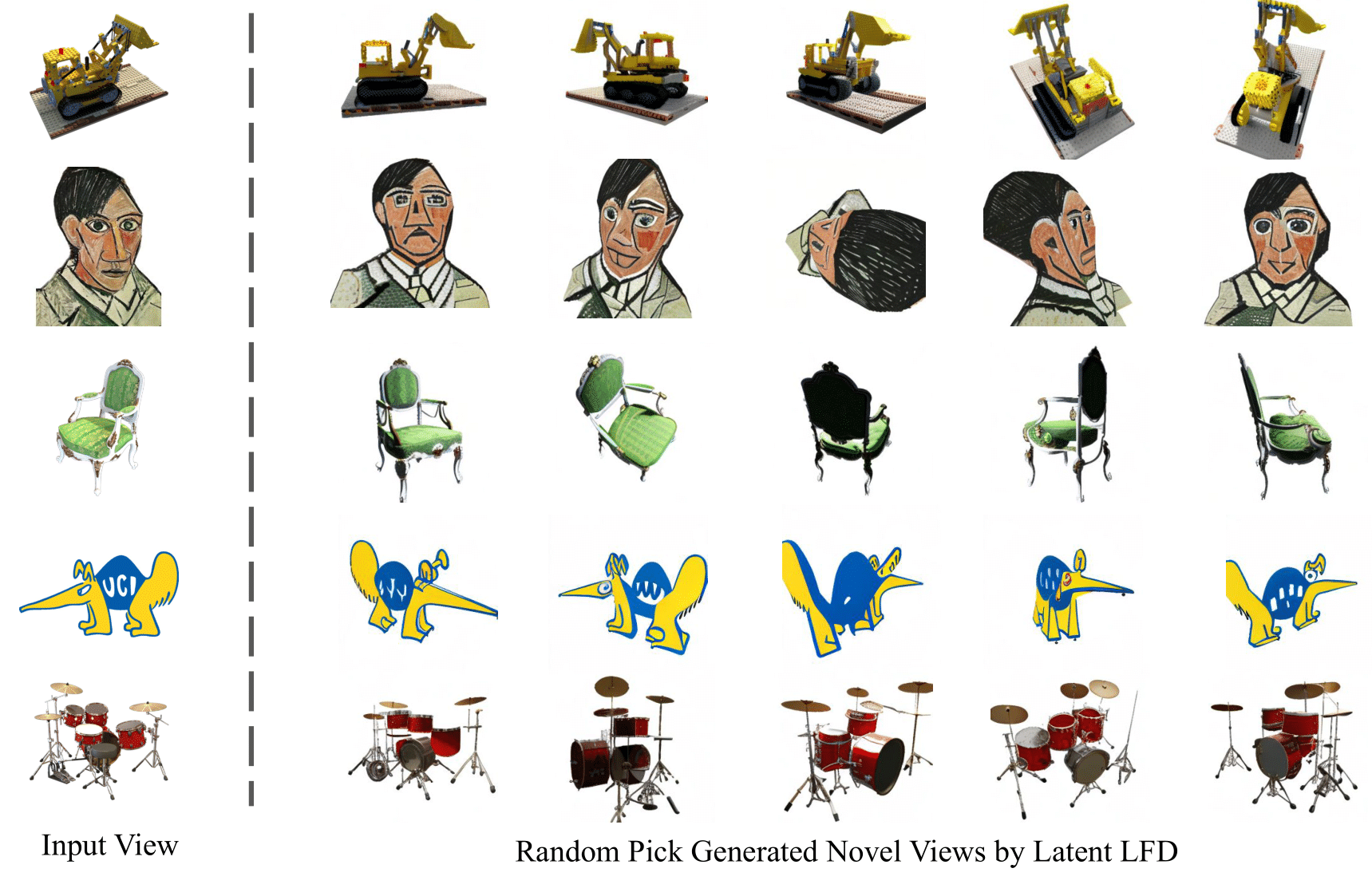

NVS on in-the-wild images. The first column displays the input view, while the subsequent columns showcase the diverse and high-resolution novel views generated by our model. Our latent LFD successfully generates images from arbitrary views with consistent appearance.

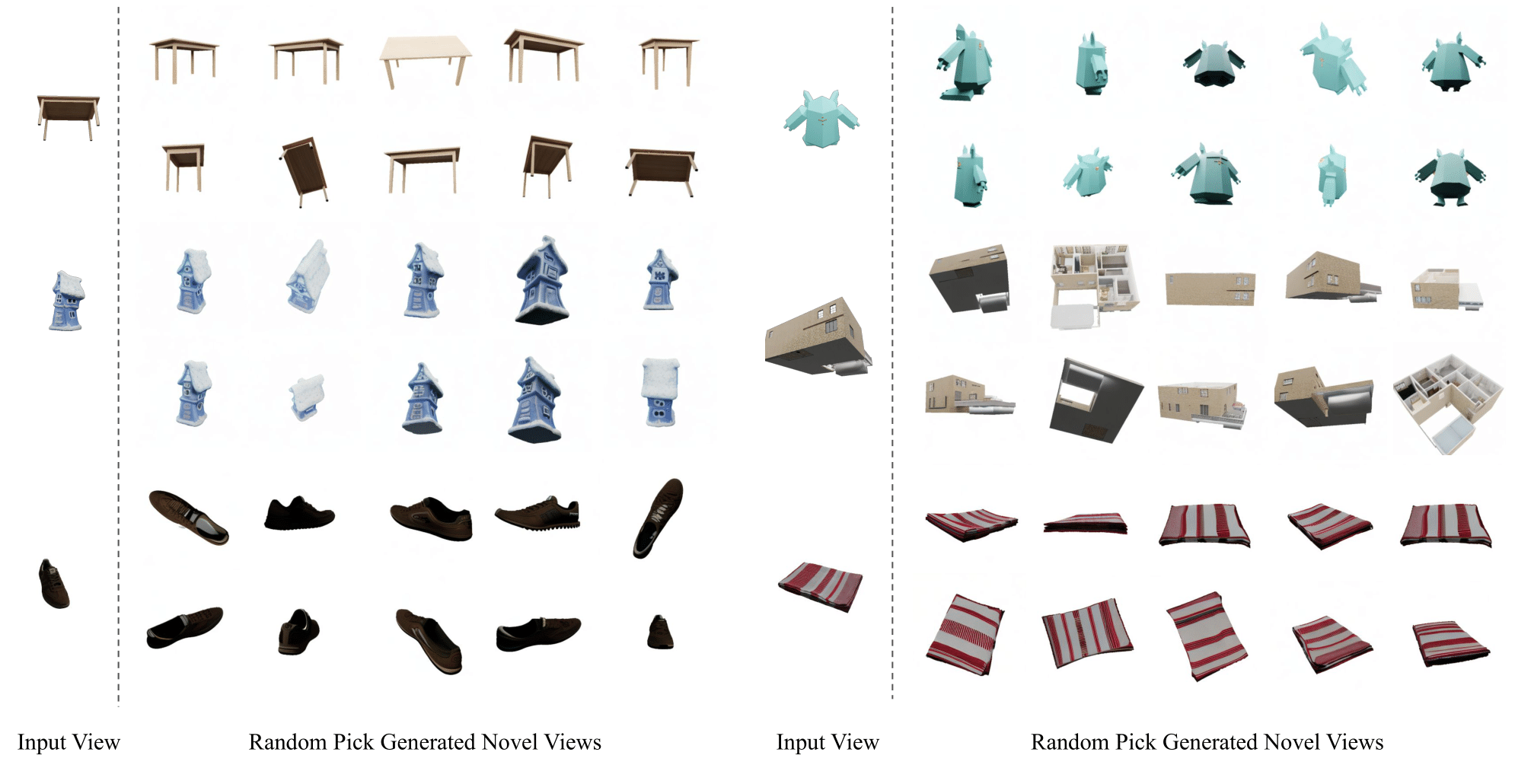

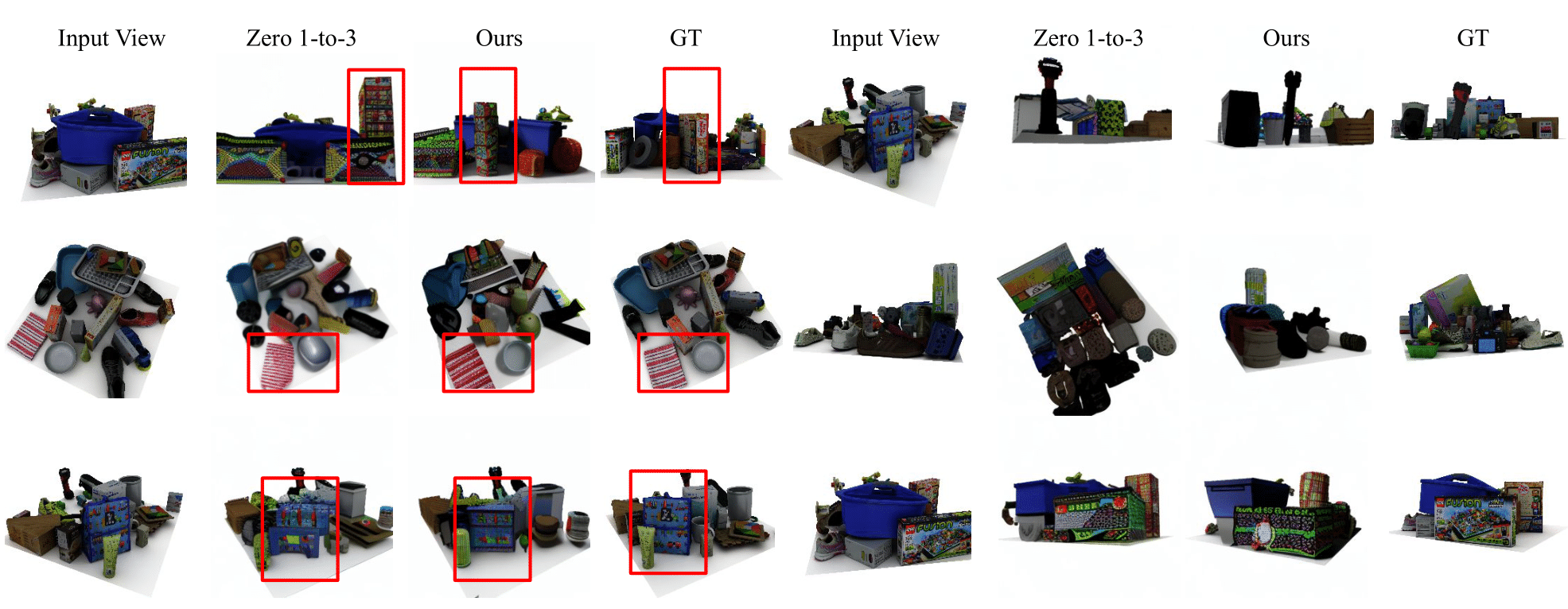

NVS on the RTMV dataset. Note that the RTMV dataset is out-of-distribution, which makes this a very challenging task. These examples illustrate our model's ability to generate coherent and detailed images under large camera viewpoint changes.

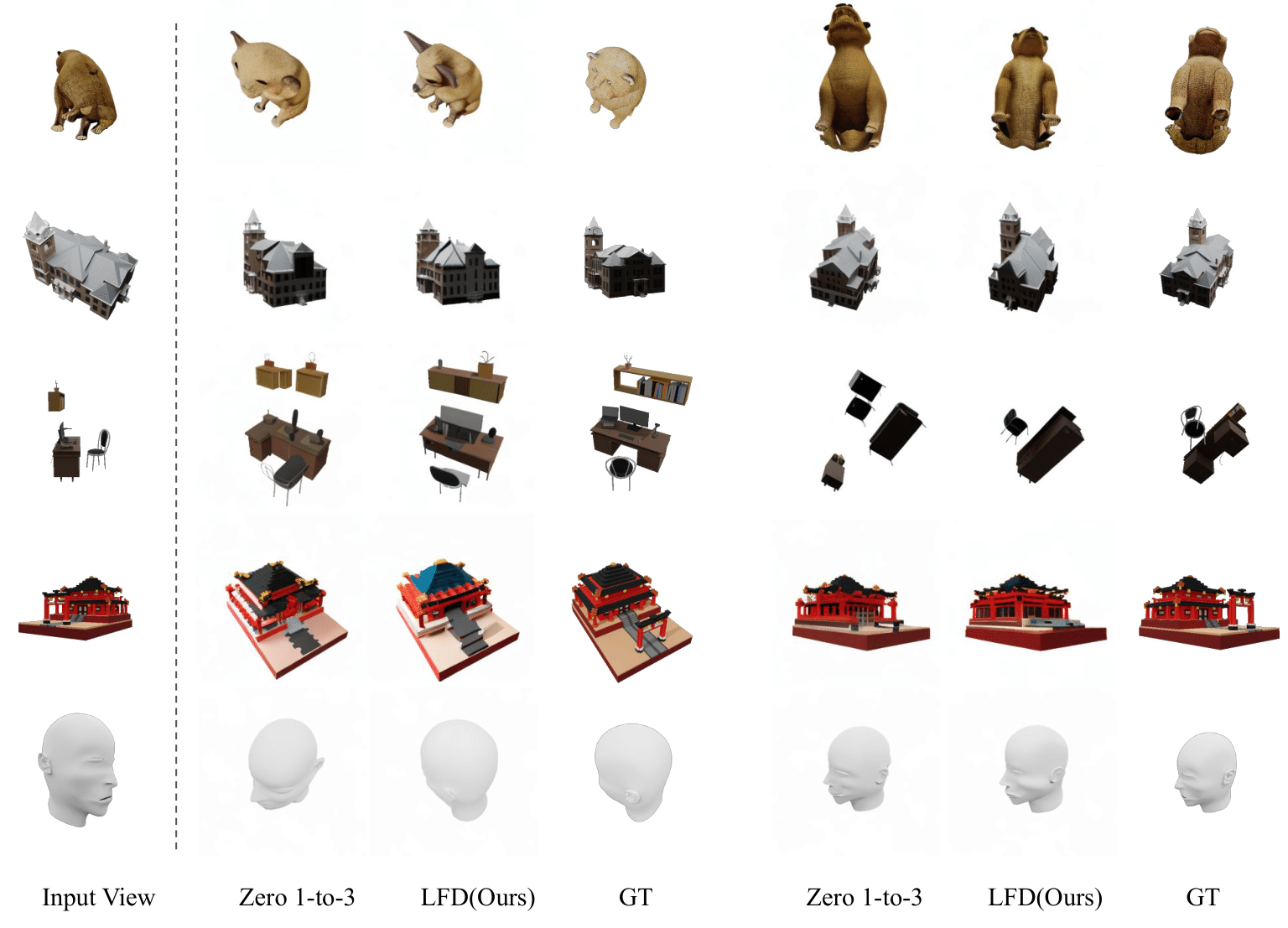

NVS on the Objaverse dataset. We use the first image of each scene as the input view and synthesize two random novel views. Compared with the baselines, our method generates results that are more consistent and more faithful to the target viewpoint.

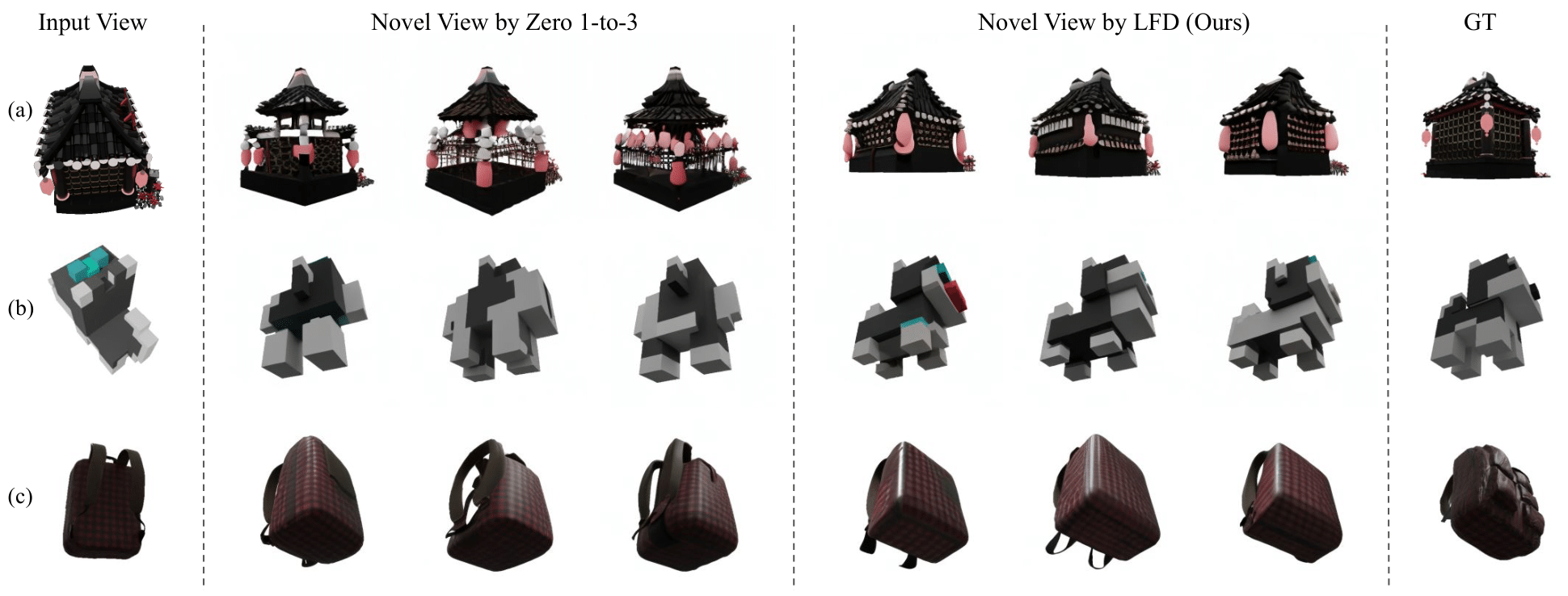

Comparison of diversity. Given an input view and a target viewpoint, we randomly generate 3 samples with different seeds. The baseline model sometimes fails to keep the viewpoint consistent across samples.

@article{xiong2023light,
  author = {Yifeng Xiong and Haoyu Ma and Shanlin Sun and Kun Han and Hao Tang and Xiaohui Xie},
  title = {Light Field Diffusion for Single-View Novel View Synthesis},
  journal = {arxiv preprint},
  year = {2023},
}